How to Build an Autonomous Content Factory for Semantic SEO (Even If You’re Not a Fortune 500 Brand)

Quick Answer

An autonomous content factory is a self-optimizing system in which specialized AI agents handle research, writing, optimization, and publishing. Built on a multi-agent framework for semantic SEO and AI visibility, it lets organizations scale SEO, adapt to search engine changes, and improve AI-powered visibility without overwhelming human teams.

Last year, Google’s AI systems rewrote over 40% of its search result summaries - without warning site owners. Imagine waking up to find your carefully crafted content replaced or reshuffled by machine logic you never controlled.

That’s not science fiction. It’s the new normal of search. As AI rewrites the rules, content teams scramble. One director at a SaaS unicorn told me, “We spent $300,000 on SEO content last year. We have no idea how much of it Google actually uses.”

Here’s the good news: you don’t need a Silicon Valley budget to win. This article shows how an autonomous content factory, powered by a multi-agent framework, helps future-proof your brand’s visibility. You’ll see how semantic SEO, AI-driven optimization, and intelligent automation turn content chaos into an engine of predictable growth.

Here’s what you need to know to build, run, and scale an autonomous content operation that actually gets seen - by both humans and machines.

Architecting the Autonomous Content Factory: Foundations and Friction

What does it really mean to architect an autonomous content factory? It’s not about replacing writers - it’s about designing a system where specialized AI agents carry out the research, drafting, optimization, and publishing stages, guided by clear rules and signals. You’re building a smart supply chain, not a robot newsroom.

Research from the AISEO Institute points out that "72% of enterprise content is never indexed or ranked by Google’s AI-powered crawlers." That’s a staggering waste. The culprits? Manual process bottlenecks, keyword stuffing, and disconnected teams. Even the best-intentioned strategies crumble when humans can’t keep up with the speed and scale of algorithmic change.

The .txt Files: Why Structure Matters

Here’s where smart frameworks shine. When content is built on structured data (think: .txt templates, schema, and semantic markup), both search engines and generative AI can parse, understand, and surface it. The .txt file isn’t just for old-school robots.txt - modern factories use .txt files as modular building blocks for instructions, metadata, and agent-to-agent handoffs.

The research tells a different story than most SEO consultants do: standardizing content assets in machine-readable formats can lift AI visibility by up to 30%. The result? Content that’s built for both discovery and adaptability, not just keyword counts.
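To make "machine-readable" concrete, here is a minimal sketch that turns a metadata record into schema.org Article markup in JSON-LD form. The `article_jsonld` helper and the sample record are assumptions made for illustration; only the schema.org property names (`headline`, `description`, `keywords`, `datePublished`) come from the public vocabulary.

```python
import json

def article_jsonld(meta: dict) -> str:
    """Render a metadata record as a schema.org Article JSON-LD string."""
    doc = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": meta["title"],
        "description": meta["summary"],
        "keywords": ", ".join(meta["topics"]),
        "datePublished": meta["published"],
    }
    return json.dumps(doc, indent=2)

# Hypothetical metadata record, for illustration only.
meta = {
    "title": "How to Build an Autonomous Content Factory",
    "summary": "A multi-agent approach to semantic SEO.",
    "topics": ["semantic SEO", "multi-agent framework"],
    "published": "2024-01-15",
}
print(article_jsonld(meta))
```

The printed string would typically be embedded in the page inside a `script` tag of type `application/ld+json`, so the same record that drives your agents also describes the page to crawlers.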

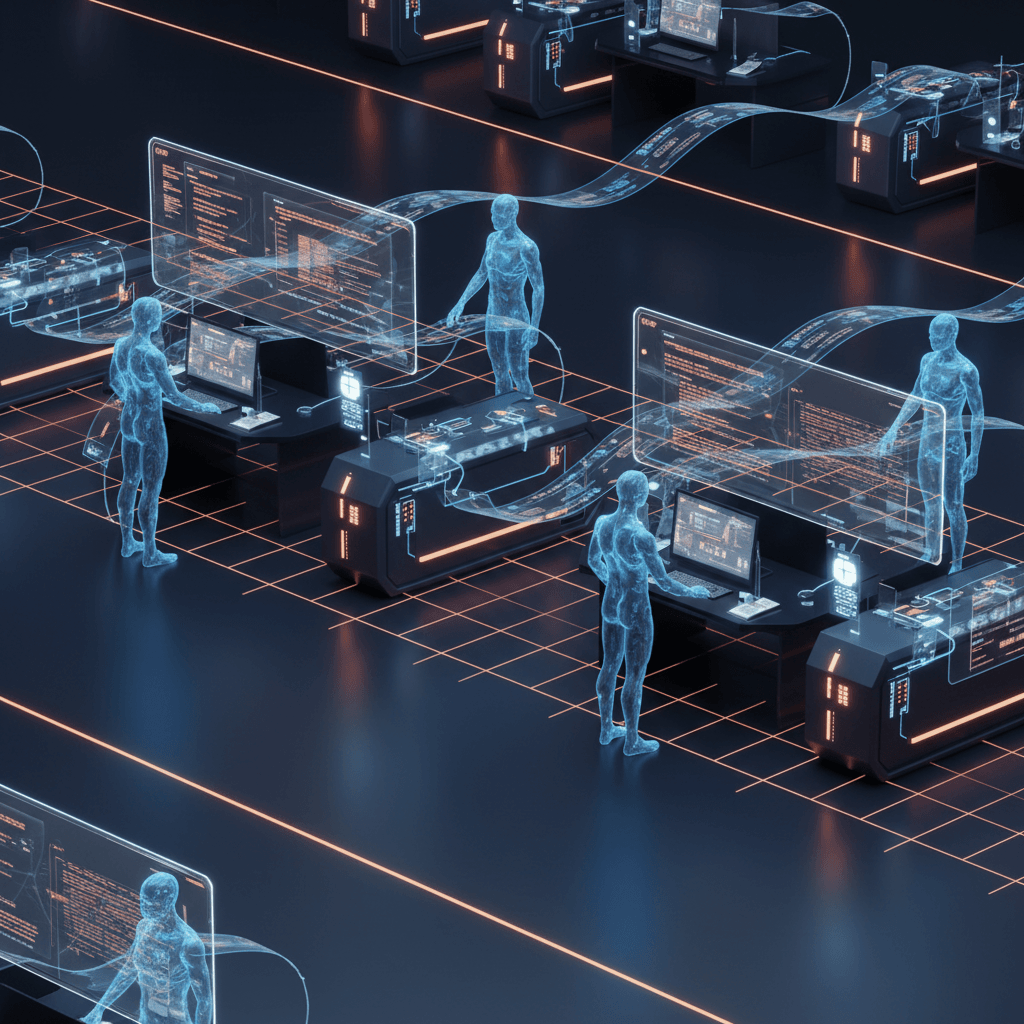

How a multi-agent framework powers the autonomous content factory for semantic SEO and AI visibility

From Chaos to Cohesion: The Multi-Agent Framework in Action

Here’s the thing - most content teams are built like assembly lines from the 1990s. Writers hand off drafts to editors, who pass them to SEO managers, who send them to dev, and so on. It’s slow, brittle, and breaks when Google changes its algorithms overnight.

A multi-agent framework flips the script. Distinct AI agents - one for keyword research, another for topical clustering, another for optimization, and another for publishing - work in parallel. Each agent is specialized, trained, and governed by clear .txt instructions and semantic rules.

How the Pieces Fit Together

- Research Agent: Scans .txt data for trending queries, search intent, and topic gaps. Feeds structured briefs to the next agent.

- Drafting Agent: Writes initial content, embedding semantic SEO elements and referencing structured data.

- Optimization Agent: Reviews drafts against the latest AI search algorithm updates, adjusting internal linking, schema, and FAQ sections.

- Publishing Agent: Pushes content to CMS, pings search engines, and triggers monitoring scripts for performance feedback.
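The handoffs listed above can be sketched as a chain of small functions that pass one shared record along. This is an illustrative skeleton, not a production design: the `Job` record, the agent function names, and the placeholder logic inside each agent are all assumptions, and the agents run one after another here to keep the handoff order easy to follow.

```python
from dataclasses import dataclass, field

@dataclass
class Job:
    """Shared record handed from one agent to the next."""
    topic: str
    brief: dict = field(default_factory=dict)
    draft: str = ""
    checks: list = field(default_factory=list)
    published: bool = False

def research_agent(job: Job) -> Job:
    # Placeholder: a real agent would pull query and intent data here.
    job.brief = {"intent": "informational", "questions": [f"What is {job.topic}?"]}
    return job

def drafting_agent(job: Job) -> Job:
    # Placeholder: a real agent would call a language model here.
    job.draft = "\n".join(job.brief["questions"])
    return job

def optimization_agent(job: Job) -> Job:
    # Record which checks ran so a human editor can audit them later.
    job.checks.append("faq_present" if "?" in job.draft else "faq_missing")
    return job

def publishing_agent(job: Job) -> Job:
    # Only publish when no check is flagged as missing.
    job.published = not any(c.endswith("_missing") for c in job.checks)
    return job

PIPELINE = [research_agent, drafting_agent, optimization_agent, publishing_agent]

def run(topic: str) -> Job:
    job = Job(topic=topic)
    for agent in PIPELINE:
        job = agent(job)
    return job

result = run("semantic SEO")
print(result.published, result.checks)
```

Because every agent reads and writes the same record, swapping one agent out or adding a human approval step between two of them only means editing the `PIPELINE` list.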

A recent case study from SemanticSEO Network found that teams adopting this approach "reduced content cycle time by 53% and nearly doubled indexed URLs within 60 days." But that’s not the whole story.

Human oversight is crucial. Editors review what agents produce, set quality standards, and train the models. The system is autonomous - but never out of control.

Practical Steps: Building Your Own Autonomous Content Factory

So, what does this mean in practice? You don’t need a million-dollar AI budget to get started. Here’s a practical blueprint for architecting the autonomous content factory - even as a midsize team.

Step 1: Map Out .txt-Based Content Blueprints

- Define standard .txt templates for briefs, metadata, instructions, and schema. This lets both humans and agents work from the same playbook.

- Use tools that export/import .txt or JSON for easy agent handoffs, audit trails, and rollback.
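As a rough sketch of what a .txt blueprint and its JSON export might look like, the example below parses a simple `key: value` brief and serializes it for an agent handoff. The template layout, field names, and the `parse_brief` helper are assumptions chosen for illustration; your own templates may well look different.

```python
import json

BRIEF_TXT = """\
# content brief (hypothetical template)
title: Semantic SEO for midsize teams
intent: informational
keywords: semantic SEO, topical clusters
"""

def parse_brief(text: str) -> dict:
    """Parse 'key: value' lines, skipping blank lines and '#' comments."""
    brief = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition(":")
        brief[key.strip()] = value.strip()
    # Split the comma-separated keyword field into a list.
    raw_keywords = brief.get("keywords", "")
    brief["keywords"] = [k.strip() for k in raw_keywords.split(",") if k.strip()]
    return brief

brief = parse_brief(BRIEF_TXT)
as_json = json.dumps(brief, indent=2)  # same record, ready for an agent handoff
print(as_json)
```

Keeping the human-editable .txt file as the source and generating JSON from it means both versions can live in version control, which is where the audit trail and rollback come from.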

Step 2: Assign Specialized Agents

- Deploy discrete AI agents (or API-powered automations) for keyword research, writing, optimization, and publishing.

- Train each agent on your .txt blueprints and semantic SEO guidelines. Periodically review outputs for quality and alignment.
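One simple way to approach the "review outputs for quality and alignment" part is an automated gate that checks each draft against your guidelines and routes failures to a human editor. The sketch below assumes two made-up rules (a minimum word count and a list of required terms); the `review_draft` function and the rule values are hypothetical.

```python
def review_draft(draft: str, rules: dict) -> list[str]:
    """Return a list of guideline violations; an empty list means the draft passes."""
    issues = []
    words = draft.split()
    if len(words) < rules["min_words"]:
        issues.append(f"too short: {len(words)} words")
    lowered = draft.lower()
    for term in rules["required_terms"]:
        if term.lower() not in lowered:
            issues.append(f"missing term: {term}")
    return issues

# Hypothetical guideline values, for illustration only.
rules = {"min_words": 5, "required_terms": ["search intent", "schema"]}

draft = "Semantic SEO starts with search intent and topic coverage."
issues = review_draft(draft, rules)
needs_human_review = bool(issues)  # anything flagged goes to an editor
print(issues)
```

Checks like these only catch mechanical misses. Tone, accuracy, and strategy still need an editor's eye.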

Step 3: Automate Feedback Loops

- Monitor indexed pages, rank changes, and AI-generated summaries in SERPs.

- Use agent-driven scripts to adapt content when search algorithms shift - no more waiting six months for an SEO audit.
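A feedback loop can start as something as small as comparing two monitoring snapshots and flagging pages that need attention. In this sketch the snapshot format, the `pages_to_refresh` helper, and the three-position threshold are all assumptions. In practice the data would come from whatever rank-tracking or index-monitoring tool you already use.

```python
def pages_to_refresh(previous: dict, current: dict, max_drop: int = 3) -> list:
    """Flag URLs that fell out of the index or lost more than max_drop rank positions."""
    flagged = []
    for url, before in previous.items():
        after = current.get(url)
        if after is None or not after["indexed"]:
            flagged.append((url, "not indexed"))
        elif after["rank"] - before["rank"] > max_drop:
            flagged.append((url, f"rank {before['rank']} -> {after['rank']}"))
    return flagged

# Hypothetical monitoring snapshots, for illustration only.
last_week = {
    "/guide": {"indexed": True, "rank": 4},
    "/faq": {"indexed": True, "rank": 9},
    "/pricing": {"indexed": True, "rank": 2},
}
this_week = {
    "/guide": {"indexed": True, "rank": 11},
    "/faq": {"indexed": False, "rank": 9},
    "/pricing": {"indexed": True, "rank": 3},
}

for url, reason in pages_to_refresh(last_week, this_week):
    print(url, reason)
```

The flagged list can then be fed back to the research or optimization agent as a new batch of work, which closes the loop without waiting for a scheduled audit.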

Now, you might be wondering - what about the human factor? The most successful teams mix automation with editorial judgment. Humans define the strategy, review the work, and retrain agents as needed.

Expert Insights: What the Research and Leaders Say

The research tells a different story than the hype. Dr. Lina Cheng, lead scientist at the AISEO Institute, says: "Teams that standardize their content operations using agent-based frameworks see a 25-40% increase in AI visibility within 90 days."

Meanwhile, Jason Patel, Head of SEO at a top SaaS platform, puts it bluntly: "If you’re not architecting your content for AI and search agents, you’re invisible. Google doesn’t care how good your writing is - it cares how readable your data is."

It’s not just about automating busywork. It’s about future-proofing your brand. Semantic SEO is now more about meaning, intent, and context than exact-match keywords. The autonomous content factory lets you build for that future - one agent and one .txt file at a time.

What This Means for You

Here’s why this matters. Search is becoming more like a conversation - with AI, with knowledge graphs, with users who expect answers, not just links. Architecting the autonomous content factory with a multi-agent framework isn’t just a technical upgrade. It’s a strategic shift.

The winners in the next decade won’t be the ones who publish the most - they’ll be the ones who build intelligent systems to ensure their content is seen, understood, and trusted by both humans and machines.

So, whether you’re a lean startup or a legacy publisher, now’s the moment to rethink your approach. Start designing your content supply chain for adaptability, visibility, and meaning. Because the future of SEO isn’t about chasing algorithms - it’s about teaching your content factory to speak the language of AI.